stderr log/postgresql.log

csvlog log/postgresql.csv

PG主从部署

1. 下载安装包

各个版本可以从 https://www.postgresql.org/ftp/source/ 下载

本次选用pg13.6版本

2. 部署主库步骤

解压,编译

tar zxvf postgresql-13.6.tar.gz

mkdir -p /data1/pg5432/{pgdata,archive,scripts,backup,soft,conf,logs}

mkdir /usr/local/postgresql-13.6

yum install readline* systemd-devel zlib* python-devel python3-devel -y

./configure --prefix=/usr/local/postgresql-13.6 --with-python --with-systemd

make && make install

创建依赖的用户配置环境变量

groupadd postgres

useradd -g postgres postgres

chown -R postgres.postgres /data1/pg5432

su - postgres

vim .bash_profile

## 新增

export LANG=en_US.UTF8

export PGPORT=5432

export PGDATA=/data1/pg5432/pgdata

export PGHOME=/usr/local/postgresql-13.6/

export LD_LIBRARY_PATH=$PGHOME/lib:/lib64:/usr/lib64:/usr/local/lib64:/lib:/usr/lib:/usr/local/lib:$LD_LIBRARY_PATH

export PATH=$PGHOME/bin:$PATH:.

export DATE=`date +"%Y%m%d%H%M"`

export MANPATH=$PGHOME/share/man:$MANPATH

export PGHOST=$PGDATA

export PGUSER=postgres

export PGDATABASE=postgres

初始化数据库

initdb -D /data1/pg5432/pgdata -E UTF8 --locale=en_US.UTF8 -U postgres

修改配置文件

postgresql的配置文件要包含自定义的配置文件

echo "include '/data1/pg5432/conf/my.conf'" >> postgresql.conf

touch my.conf

wal_level= replica

archive_mode=on

#archive_command='test ! -f /postgresql/archive/%f || cp %p /postgresql/archive/%f'

listen_addresses = '*'

max_wal_senders=10

wal_sender_timeout=60s

#primary_conninfo = ''

max_replication_slots=10

wal_log_hints=on

## loggile

log_destination = 'csvlog'

logging_collector = on

log_directory = '/data1/pg5432/logs'

log_filename = 'postgresql-%Y-%m-%d_%H%M%S.log'

log_file_mode = 0600

log_rotation_age = 1d

log_rotation_size = 512MB

log_min_messages = info

log_min_duration_statement = 60

log_checkpoints = on

log_connections = on

log_disconnections = on

log_duration = on

log_lock_waits = on

## log DDL ##

log_statement = 'ddl'

data_directory='/data1/pg5432/pgdata'

hba_file = '/data1/pg5432/conf/pg_hba.conf'

ident_file = '/data1/pg5432/conf/pg_ident.conf'

unix_socket_directories = '/data1/pg5432/pgdata'

max_connections = 2000

shared_buffers = 8GB

effective_cache_size = 24GB

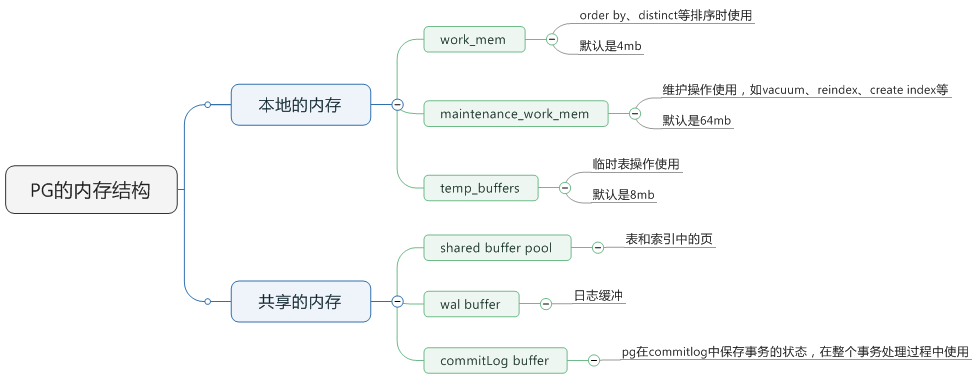

maintenance_work_mem = 2GB

checkpoint_completion_target = 0.9

wal_buffers = 16MB

default_statistics_target = 100

random_page_cost = 1.1

effective_io_concurrency = 200

work_mem = 1048kB

min_wal_size = 1GB

max_wal_size = 4GB

max_worker_processes = 32

max_parallel_workers_per_gather = 4

max_parallel_workers = 32

max_parallel_maintenance_workers = 4

日志配置:

log_destination

参数说明:openGauss支持多种方法记录服务器日志,log_destination的取值为一个逗号分隔开的列表(如log_destination="stderr,csvlog")。

该参数属于SIGHUP类型参数,请参考表1中对应设置方法进行设置。

取值范围:字符串

有效值为stderr、csvlog、syslog。

取值为stderr,表示日志打印到屏幕。

取值为csvlog,表示日志的输出格式为“逗号分隔值”即CSV (Comma Separated Value)格式。使用csvlog记录日志的前提是将logging_collector设置为on,请参见使用CSV格式写日志。

取值为syslog,表示通过操作系统的syslog记录日志。openGauss使用syslog的LOCAL0 ~ LOCAL7记录日志,请参见syslog_facility。使用syslog记录日志需在操作系统后台服务配置文件中添加代码:

local0.* /var/log/postgresql

默认值:stderr

logging_collector

参数说明:控制开启后端日志收集进程logger进行日志收集。该进程捕获发送到stderr或csvlog的日志消息并写入日志文件。

3. 配置开机自启

cat << EOF >> /usr/lib/systemd/system/postgresql-13.service

# It's not recommended to modify this file in-place, because it will be

# overwritten during package upgrades. It is recommended to use systemd

# "dropin" feature; i.e. create file with suffix .conf under

# /etc/systemd/system/postgresql-13.service.d directory overriding the

# unit's defaults. You can also use "systemctl edit postgresql-13"

# Look at systemd.unit(5) manual page for more info.

# Note: changing PGDATA will typically require adjusting SELinux

# configuration as well.

# Note: do not use a PGDATA pathname containing spaces, or you will

# break postgresql-13-setup.

[Unit]

Description=PostgreSQL 13 database server

Documentation=https://www.postgresql.org/docs/13/static/

After=syslog.target

After=network.target

[Service]

Type=simple

User=postgres

Group=postgres

# Note: avoid inserting whitespace in these Environment= lines, or you may

# break postgresql-setup.

# Location of database directory

Environment=PGDATA=/data1/pg5432/pgdata/

# Where to send early-startup messages from the server (before the logging

# options of postgresql.conf take effect)

# This is normally controlled by the global default set by systemd

# StandardOutput=syslog

# Disable OOM kill on the postmaster

OOMScoreAdjust=-1000

Environment=PG_OOM_ADJUST_FILE=/proc/self/oom_score_adj

Environment=PG_OOM_ADJUST_VALUE=0

ExecStart=/usr/local/postgresql-13.6/bin/postmaster -D ${PGDATA}

ExecReload=/bin/kill -HUP $MAINPID

KillMode=mixed

KillSignal=SIGINT

# Do not set any timeout value, so that systemd will not kill postmaster

# during crash recovery.

TimeoutSec=0

# 0 is the same as infinity, but "infinity" needs systemd 229

TimeoutStartSec=0

TimeoutStopSec=1h

[Install]

WantedBy=multi-user.target

EOF

pg部署.txtpostgresql-13.service.txt

参数优化建议: https://pgtune.leopard.in.ua/

参数 :effective_cache_size

优化器假设可以用于单个查询的磁盘缓存的有效大小。这个因素会被用到使用索引的成本考虑中:值越大,使用索引扫描的可能性就越大;值越小,使用顺序扫描的可能性就越大。

设置该参数的时候,需要同时考虑到shared buffer和内核对磁盘缓存的使用,尽管有些数据会同时存在shared buffer和内核的磁盘缓存中。同时要考虑到在不同的表上并发查询的数量,因为他们也会使用到共享空间。

该参数不会影响分配给postgresql的共享内存,也不保留内核磁盘缓存。只是用于优化器的评估目的。系统也不会假设不同查询之间的数据保留在磁盘缓存上。默认是4GB。

4. 安装timescaleDB插件

从源码安装:https://docs.timescale.com/install/latest/self-hosted/installation-source/

git clone https://github.com/timescale/timescaledb.git

## release notes : https://github.com/timescale/timescaledb/releases

cd timescaledb

git checkout 2.5.2

./bootstrap

需要3.11以上的cmake

yum install cmake3 -y

修改bootstrap脚本,把cmake 改为cmake3 , 或者alias cmake=cmake3

./bootstrap -DUSE_OPENSSL=0

# 切换 root 用户安装

cd ./build && make

make install

# 结束之后:

在配置文件中添加:

shared_preload_libraries = 'timescaledb'

# 重启数据库

systemctl restart postgresql-14

5. 创建业务用户表空间和用户等

# 查看所有表空间

select oid,* from pg_tablespace;

# 创建表空间,用户,数据库

create user zbxuser with login password 'zbx123';

create tablespace zabbix owner zbxuser location '/data/pg5432/pgdata/pg_tablespace';

create database zabbix owner zbxuser encoding utf8 tablespace zabbix;

另外,pg还有schema的概念

zabbix=> create schema zbxuser;

CREATE SCHEMA

zabbix=> \dn

List of schemas

Name | Owner

--------------------------+----------

_timescaledb_cache | postgres

_timescaledb_catalog | postgres

_timescaledb_config | postgres

_timescaledb_internal | postgres

public | postgres

timescaledb_experimental | postgres

timescaledb_information | postgres

zbxuser | zbxuser

(8 rows)

用户建立 的数据库默认是在public模式里

# 建表的时候可以指定创建在哪个schema里

zabbix=> create table zbxuser.t1 ( id int, uname varchar(10));

CREATE TABLE

zabbix=> \dt

List of relations

Schema | Name | Type | Owner

---------+------+-------+---------

zbxuser | t1 | table | zbxuser

(1 row)

如上,zbxuser建立在自己的schema里的表,别的用户是看不到的,除非更改用户的 search_path ,添加zbxuser才能看到。

postgres=# \c zabbix

You are now connected to database "zabbix" as user "postgres".

zabbix=# \dt

Did not find any relations.

zabbix=# \dt

Did not find any relations.

zabbix=# show search_path;

search_path

-----------------

"$user", public

(1 row)

zabbix=# set search_path to "$user", public, zbxuser;

SET

zabbix=# \dt

List of relations

Schema | Name | Type | Owner

---------+------+-------+---------

zbxuser | t1 | table | zbxuser

(1 row)

zabbix=# show search_path;

search_path

--------------------------

"$user", public, zbxuser

(1 row)

配置pg_hba.conf 防火墙

格式:

# TYPE DATABASE USER ADDRESS METHOD

# METHOD can be "trust", "reject", "md5", "password", "scram-sha-256",

# "gss", "sspi", "ident", "peer", "pam", "ldap", "radius" or "cert".

# Note that "password" sends passwords in clear text; "md5" or

# "scram-sha-256" are preferred since they send encrypted passwords.

# 例如

host zabbix zbxuser 192.168.32.71/32 password

# 配置后,库里执行 SELECT pg_reload_conf();

postgres=# SELECT pg_reload_conf();

pg_reload_conf

----------------

t

(1 row)

6. 为指定数据库开启timescaleDB扩展

## CREATE EXTENSION IF NOT EXISTS timescaledb;

zabbix=> CREATE EXTENSION IF NOT EXISTS timescaledb;

WARNING:

WELCOME TO

_____ _ _ ____________

|_ _(_) | | | _ \ ___ \

| | _ _ __ ___ ___ ___ ___ __ _| | ___| | | | |_/ /

| | | | _ ` _ \ / _ \/ __|/ __/ _` | |/ _ \ | | | ___ \

| | | | | | | | | __/\__ \ (_| (_| | | __/ |/ /| |_/ /

|_| |_|_| |_| |_|\___||___/\___\__,_|_|\___|___/ \____/

Running version 2.5.2

For more information on TimescaleDB, please visit the following links:

1. Getting started: https://docs.timescale.com/timescaledb/latest/getting-started

2. API reference documentation: https://docs.timescale.com/api/latest

3. How TimescaleDB is designed: https://docs.timescale.com/timescaledb/latest/overview/core-concepts

Note: TimescaleDB collects anonymous reports to better understand and assist our users.

For more information and how to disable, please see our docs https://docs.timescale.com/timescaledb/latest/how-to-guides/configuration/telemetry.

CREATE EXTENSION

# 查看扩展

zabbix=> \x

Expanded display is on.

zabbix=> \dx

List of installed extensions

-[ RECORD 1 ]------------------------------------------------------------------

Name | plpgsql

Version | 1.0

Schema | pg_catalog

Description | PL/pgSQL procedural language

-[ RECORD 2 ]------------------------------------------------------------------

Name | timescaledb

Version | 2.5.2

Schema | public

Description | Enables scalable inserts and complex queries for time-series data

zabbix=> \x

7. 从库搭建

# 创建repl用户,并授权

create user repl login replication ENCRYPTED PASSWORD 'repl1234';

# 配置主库 pg_hba.conf,允许repl用户可以从从库连接

# 在从库做备份

pg_basebackup -h 192.168.32.128 -p 5432 -U repl -F p -P -R -X stream -D /data1/pg5432/pgdata -W

-h –指定作为主服务器的主机。

-D –指定数据目录。

-U –指定连接用户。

-P –启用进度报告。

-v –启用详细模式。

-R –启用恢复配置的创建:创建一个standby.signal文件,并将连接设置附加到数据目录下的postgresql.auto.conf。

-X –用于在备份中包括所需的预写日志文件(WAL文件)。流的值表示在创建备份时流式传输WAL。

-C –在开始备份之前,允许创建由-S选项命名的复制插槽。

-S –指定复制插槽名称。

SELECT pg_reload_conf();

修改从库配置文件,增加

# standby

primary_conninfo = 'host=192.168.32.128 port=5432 user=repl password=repl1234 application_name=s1'

recovery_target_timeline = latest

hot_standby=on

max_standby_streaming_delay = 30s

wal_receiver_status_interval = 10s

hot_standby_feedback = on

报错:

May 05 14:41:09 test02 postmaster[18467]: 2022-05-05 14:41:09.336 CST [18467] FATAL: data directory "/data1/pg5432/pgdata" has invalid permissions

May 05 14:41:09 test02 postmaster[18467]: 2022-05-05 14:41:09.336 CST [18467] DETAIL: Permissions should be u=rwx (0700) or u=rwx,g=rx (0750).

处理:

chmod 0700 -R /data1/pg5432/pgdata

从主库查看复制状态:

pg_controldata

# 主库

Database cluster state: in production

# 从库

Database cluster state: in archive recovery

# 从库里查看状态

select client_addr,sync_state,application_name from pg_stat_replication;

8. pg归档日志管理

1、在主库,postgresql.conf配置文件配置如下

archive_mode=on

archive_command =‘cp -i %p /u01/pg9.1/archivelog/%f </dev/null’

主库开启归档模式,归档日志写入/pg9.1/data/pg_xlog目录,archive_command把归档日志复制到/u01/pg9.1/archivelog/

2、在备库,archive_mode、archive_command是否注销对备库没有任何影响,备库不会加载该配置。

3、主库归档事务提交后,备库进行相应操作,进行归档,归档日志写入/pg9.1/data/pg_xlog目录,但不执行archive_command命令。

原文链接:https://blog.csdn.net/guoxianqi2012/article/details/7341617

# 查看archive状态

# 切换归档日志

# --- 测试 ----

[postgres@test01 /data1/pg5432/pgdata/pg_wal]# tree

.

├── 000000010000000000000002.00000028.backup

├── 000000010000000000000004.00000028.backup

├── 000000010000000000000006

├── 000000010000000000000007

├── 000000010000000000000008

├── 000000010000000000000009

├── 00000001000000000000000A

├── 00000001000000000000000B

└── archive_status

├── 000000010000000000000002.00000028.backup.done

├── 000000010000000000000004.00000028.backup.done

└── 000000010000000000000006.done

1 directory, 11 files

[postgres@test01 /data1/pg5432/pgdata/pg_wal]# psql -h 127.0.0.1

psql (13.5)

Type "help" for help.

postgres=# select pg_switch_wal();

pg_switch_wal

---------------

0/7000078

(1 row)

postgres=# \q

[postgres@test01 /data1/pg5432/pgdata/pg_wal]# tree

.

├── 000000010000000000000002.00000028.backup

├── 000000010000000000000004.00000028.backup

├── 000000010000000000000006

├── 000000010000000000000007

├── 000000010000000000000008

├── 000000010000000000000009

├── 00000001000000000000000A

├── 00000001000000000000000B

└── archive_status

├── 000000010000000000000002.00000028.backup.done

├── 000000010000000000000004.00000028.backup.done

├── 000000010000000000000006.done

└── 000000010000000000000007.done

1 directory, 12 files

# 修改archive_command测试

# 修改为 archive_command='cp %p /data1/pg5432/archive/%f'

[postgres@test01 /data1/pg5432/pgdata/pg_wal]# tree

.

├── 000000010000000000000002.00000028.backup

├── 000000010000000000000004.00000028.backup

├── 00000001000000000000000B

├── 00000001000000000000000C

├── 00000001000000000000000D

├── 00000001000000000000000E

├── 00000001000000000000000F

├── 000000010000000000000010

└── archive_status

├── 000000010000000000000002.00000028.backup.done

├── 000000010000000000000004.00000028.backup.done

└── 00000001000000000000000B.done

1 directory, 11 files

[postgres@test01 /data1/pg5432/pgdata/pg_wal]# ll /data1/pg5432/archive/

total 16384

-rw------- 1 postgres postgres 16777216 May 9 14:21 00000001000000000000000B

archive_command常用设置: https://blog.csdn.net/ctypyb2002/article/details/82702174

先分析下archive_command中说白了就是对%p 和%f的操作,

%p 要归档的wal路径 %f 归档的wal文件名

# 非压缩

archive_command = 'cp %p /data/backup/pgwalarchive/%f && echo %f >> /data/backup/pgwalarchive/archive.list'

restore_command = 'cp /data/backup/pgwalarchive/%f %p'

9. timescaledb自动分区测试

相关资料连接:https://docs.timescale.com/api/latest/hypertable/create_hypertable/#create-hypertable

ALTER TABLE history RENAME TO history_old;

CREATE TABLE history (

itemid bigint NOT NULL,

clock integer DEFAULT '0' NOT NULL,

value DOUBLE PRECISION DEFAULT '0.0000' NOT NULL,

ns integer DEFAULT '0' NOT NULL,

PRIMARY KEY (itemid,clock,ns)

);

ALTER TABLE history_uint RENAME TO history_uint_old;

CREATE TABLE history_uint (

itemid bigint NOT NULL,

clock integer DEFAULT '0' NOT NULL,

value numeric(20) DEFAULT '0' NOT NULL,

ns integer DEFAULT '0' NOT NULL,

PRIMARY KEY (itemid,clock,ns)

);

ALTER TABLE history_str RENAME TO history_str_old;

CREATE TABLE history_str (

itemid bigint NOT NULL,

clock integer DEFAULT '0' NOT NULL,

value varchar(255) DEFAULT '' NOT NULL,

ns integer DEFAULT '0' NOT NULL,

PRIMARY KEY (itemid,clock,ns)

);

ALTER TABLE history_log RENAME TO history_log_old;

CREATE TABLE history_log (

itemid bigint NOT NULL,

clock integer DEFAULT '0' NOT NULL,

timestamp integer DEFAULT '0' NOT NULL,

source varchar(64) DEFAULT '' NOT NULL,

severity integer DEFAULT '0' NOT NULL,

value text DEFAULT '' NOT NULL,

logeventid integer DEFAULT '0' NOT NULL,

ns integer DEFAULT '0' NOT NULL,

PRIMARY KEY (itemid,clock,ns)

);

ALTER TABLE history_text RENAME TO history_text_old;

CREATE TABLE history_text (

itemid bigint NOT NULL,

clock integer DEFAULT '0' NOT NULL,

value text DEFAULT '' NOT NULL,

ns integer DEFAULT '0' NOT NULL,

PRIMARY KEY (itemid,clock,ns)

);

创建超级表

SELECT create_hypertable('conditions', 'time', chunk_time_interval => 86400000000);

SELECT create_hypertable('history', 'clock', chunk_time_interval => INTERVAL '1 hour');

若要重建策略,先删除原来的策略

SELECT remove_retention_policy(‘test’);

查看策略任务详细信息

select * from timescaledb_information.jobs

查看策略任务执行情况

SELECT * FROM timescaledb_information.job_stats

修改策略执行时间

SELECT alter_job(1015, schedule_interval => INTERVAL ‘6 minutes’);

注:1015是策略任务信息中的 job_id,schedule_interval 表示任务执行周期

其他:开启dbug调试,并查看任务运行情况

SET client_min_messages TO DEBUG1;

CALL run_job(1015);

10. 时间戳和日期的转换

SELECT EXTRACT(epoch FROM NOW());

SELECT EXTRACT(epoch FROM CAST('2017-12-06 00:17:10' AS TIMESTAMP));

如何在不同编程语言中获取现在的Unix时间戳(Unix timestamp)?

Java time

JavaScript Math.round(new Date().getTime()/1000)

getTime()返回数值的单位是毫秒

Microsoft .NET / C# epoch = (DateTime.Now.ToUniversalTime().Ticks - 621355968000000000) / 10000000

MySQL SELECT unix_timestamp(now())

Perl time

PHP time()

PostgreSQL SELECT extract(epoch FROM now())

Python 先 import time 然后 time.time()

Ruby 获取Unix时间戳:Time.now 或 Time.new

显示Unix时间戳:Time.now.to_i

SQL Server SELECT DATEDIFF(s, '1970-01-01 00:00:00', GETUTCDATE())

Unix / Linux date +%s

VBScript / ASP DateDiff("s", "01/01/1970 00:00:00", Now())

其他操作系统

(如果Perl被安装在系统中) 命令行状态:perl -e "print time"

-----------------------------------

时间戳转换

https://blog.51cto.com/u_15064650/4049428

时间戳转换: https://blog.51cto.com/u_15064650/4049428

如何在不同编程语言中获取现在的Unix时间戳(Unix timestamp)?

Java time

JavaScript Math.round(new Date().getTime()/1000)

getTime()返回数值的单位是毫秒

Microsoft .NET / C# epoch = (DateTime.Now.ToUniversalTime().Ticks - 621355968000000000) / 10000000

MySQL SELECT unix_timestamp(now())

Perl time

PHP time()

PostgreSQL SELECT extract(epoch FROM now())

Python 先 import time 然后 time.time()

Ruby 获取Unix时间戳:Time.now 或 Time.new

显示Unix时间戳:Time.now.to_i

SQL Server SELECT DATEDIFF(s, '1970-01-01 00:00:00', GETUTCDATE())

Unix / Linux date +%s

VBScript / ASP DateDiff("s", "01/01/1970 00:00:00", Now())

其他操作系统

(如果Perl被安装在系统中) 命令行状态:perl -e "print time"

如何在不同编程语言中实现Unix时间戳(Unix timestamp) → 普通时间?

-----------------------------------

时间戳转换

[https://blog.51cto.com/u_15064650/4049428](https://blog.51cto.com/u_15064650/4049428)

11. 使用yum安装postgresql

yum install centos-release-scl-rh llvm postgresql14-server

12. pg查看表结构

SELECT a.attnum, a.attname AS field, t.typname AS type, a.attlen AS length, a.atttypmod AS lengthvar

, a.attnotnull AS notnull, b.description AS comment

FROM pg_class c, pg_attribute a

LEFT JOIN pg_description b

ON a.attrelid = b.objoid

AND a.attnum = b.objsubid, pg_type t

WHERE c.relname = 'history'

AND a.attnum > 0

AND a.attrelid = c.oid

AND a.atttypid = t.oid

ORDER BY a.attnum;

13. heap堆表 存储引擎实现原理

https://blog.csdn.net/Z_Stand/article/details/125462047

14. timescale的一些操作

转换为hypertable(执行tsscaleDB 命令)

首先将表转换data为hypertable,仅对column进行时间分区ts,然后在point四个分区上添加一个额外的分区键:

#将普通表转换为时序表(对时间进行分区)

SELECT create_hypertable(‘data’, ‘ts’);

SELECT create_hypertable(‘data’, ‘ts’, chunk_time_interval => INTERVAL ‘1 day’);

#或则可以给时间块添加时间间隔,默认为7天(1.5版本以前为30天)

SELECT add_dimension(‘data’, ‘ts’, chunk_time_interval => INTERVAL ‘1 day’);

#重新设置时间分区间隔,只会对新创建的分区生效

SELECT set_chunk_time_interval(‘data’, INTERVAL ‘24 hours’);

SELECT set_chunk_time_interval(‘data’, 86400000000);

SELECT set_chunk_time_interval(‘data’, 86400000); #unix是毫秒

另外也可以添加空间分区

#直接创建

SELECT create_hypertable(‘data’, ‘ts’, ‘point’, 4); #添加4个空间分区,根据point划分分区

#若hypertable已存在,可以采用 add_dimension直接进行添加,number_partitions为空间分区的个数,必须指定

SELECT create_hypertable(‘data’, ‘ts’);

SELECT add_dimension(‘data’, ‘point’, number_partitions => 4);

#重新设置空间分区,只会对新创建的分区生效

SELECT set_number_partitions(‘data’, 2);#单个维度

SELECT set_number_partitions(‘data’, 2, ‘device_id’); #多个维度

创建索引

#创建索引(索引可以针对块进行事物,而不是整个hypertable)

CREATE INDEX ON data(ts, point) USING brin WITH (tsscaledb.transaction_per_chunk);

批量删除

drop_chunks()

删除时间范围完全落在指定时间之前(或之后)的数据块,(不同与delete,直接删除磁盘文件也会清除)

older_than 截止点的规范,所有早于此时间戳的完整块都应删除。

table_name 从中删除块的hypertable或连续聚合。

newer_than 截止点的规范,所有比此时间戳新的完整块都应删除。

可选参数

cascade 数据级联,默认为FALSE

实例

#保留最近三个月以内的数据

SELECT drop_chunks(INTERVAL '3 months', 'data');

#删除早于三个月以前的所有数据,包括相关对象(例如,视图):

SELECT drop_chunks(INTERVAL '3 months', 'data', cascade => TRUE);

#删除,前四个月到前三个月之间的数据

SELECT drop_chunks(older_than => INTERVAL '3 months', newer_than => INTERVAL '4 months', table_name => 'data')

#删除超过未来三个月的数据

SELECT drop_chunks(newer_than => now() + INTERVAL '3 months', table_name => 'data');

#删除2017年之前所有的数据

SELECT drop_chunks(DATE '2017-01-01', 'data');

SELECT drop_chunks(1483228800000, 'data'); # 时间戳操作也可以

PG主从状态查询

1. 查看主从复制状态

这些信息要在主库中查询

查看流复制的信息可以使用主库上的视图

select pg_is_in_recovery();

select pid,state,client_addr,sync_priority,sync_state from pg_stat_replication;

pg_stat_replication中几个字断记录了发送wal的位置及备库接收到的wal的位置、

sent_location–发送wal的位置

write_location–备库接收到的wal的位置

flush_location—备库写wal日志到磁盘的位置

replay_location—备库应用日志的位置

2. 查看备库落后主库多少字节

select pg_wal_lsn_diff(pg_current_wal_lsn(),replay_lsn) from pg_stat_replication;

3. 级联复制

select pg_xlog_location_diff(pg_last_xlog_replay_location(),replay_location)/1024/1024/1024 as GB from pg_stat_replication;

补充:pgsql之查看主备复制延迟

10.0及以上

SELECT

pg_wal_lsn_diff(A .c1, replay_lsn) /(1024 * 1024) AS slave_latency_MB,

pg_wal_lsn_diff(A .c1, sent_lsn) /(1024 * 1024) AS send_latency_MB,

pg_wal_lsn_diff(A .c1, flush_lsn) /(1024 * 1024) AS flush_latency_MB,

state,

backend_start,

now()::timestamp with time zone

FROM pg_stat_replication, pg_current_wal_lsn() AS A(c1)

WHERE client_addr='192.168.46.173' and application_name = 'standby1'

ORDER BY slave_latency_MB, send_latency_MB DESC

LIMIT 1;

PG分区表操作

大家知道 PostgreSQL 的分区是通过继承来实现的,按分区方式,可以实现表的列表分区,范围分区,以及复合分区等。

1. 查看分区表

SELECT

nmsp_parent.nspname AS parent_schema,

parent.relname AS parent,

nmsp_child.nspname AS child,

child.relname AS child_schema

FROM

pg_inherits

JOIN pg_class parent ON pg_inherits.inhparent = parent.oid

JOIN pg_class child ON pg_inherits.inhrelid = child.oid

JOIN pg_namespace nmsp_parent ON nmsp_parent.oid = parent.relnamespace

JOIN pg_namespace nmsp_child ON nmsp_child.oid = child.relnamespace

WHERE

parent.relname = 'table_name';

2. 查看分区表对应的分区个数

SELECT

nspname,

relname,

COUNT(*) AS partition_num

FROM

pg_class c,

pg_namespace n,

pg_inherits i

WHERE

c.oid = i.inhparent

AND c.relnamespace = n.oid

AND c.relhassubclass

AND c.relkind = 'r'

GROUP BY

1,

2

ORDER BY

partition_num DESC;

一些管理查询命令

1. 查询表或者索引的大小

select relname,pg_size_pretty(pg_relation_size(oid)) from pg_class where relname ='t2_id_brin_inx';

或

select pg_size_pretty(pg_relation_size('表名'));

2. 查出所有表(包含索引)并排序

SELECT table_schema || '.' || table_name AS table_full_name, pg_size_pretty(pg_total_relation_size('"' || table_schema || '"."' || table_name || '"')) AS size

FROM information_schema.tables

ORDER BY

pg_total_relation_size('"' || table_schema || '"."' || table_name || '"') DESC limit 20;

3. 查出表大小按大小排序并分离data与index

SELECT

table_name,

pg_size_pretty(table_size) AS table_size,

pg_size_pretty(indexes_size) AS indexes_size,

pg_size_pretty(total_size) AS total_size

FROM (

SELECT

table_name,

pg_table_size(table_name) AS table_size,

pg_indexes_size(table_name) AS indexes_size,

pg_total_relation_size(table_name) AS total_size

FROM (

SELECT ('"' || table_schema || '"."' || table_name || '"') AS table_name

FROM information_schema.tables

) AS all_tables

ORDER BY total_size DESC

) AS pretty_sizes;

4. 查看数据库中各个数据库占磁盘的大小

SELECT d.datname AS Name, pg_catalog.pg_get_userbyid(d.datdba) AS Owner,

CASE WHEN pg_catalog.has_database_privilege(d.datname, 'CONNECT')

THEN pg_catalog.pg_size_pretty(pg_catalog.pg_database_size(d.datname))

ELSE 'No Access'

END AS SIZE

FROM pg_catalog.pg_database d

ORDER BY

CASE WHEN pg_catalog.has_database_privilege(d.datname, 'CONNECT')

THEN pg_catalog.pg_database_size(d.datname)

ELSE NULL

END DESC;

5. 统计数据库中各表占用磁盘大小

SELECT

table_schema || '.' || table_name AS table_full_name,

pg_size_pretty(pg_total_relation_size('"' || table_schema || '"."' || table_name || '"')) AS size

FROM information_schema.tables

ORDER BY

pg_total_relation_size('"' || table_schema || '"."' || table_name || '"') DESC;

6. 查询表定义

SELECT

a.attname as col_name,

format_type(a.atttypid,a.atttypmod) as col_type,

a.attnotnull as is_null,

col_description(a.attrelid,a.attnum) as col_comment

FROM pg_class as c,pg_attribute as a

WHERE

a.attrelid = c.oid

and a.attnum>0

and c.relname = '表名';

pg逻辑复制搭建(从9.6到14.2)

参考文档:https://blog.csdn.net/dazuiba008/article/details/114934864

逻辑复制依赖pglogical扩展,需要安装此扩展

准工作

wget https://download.postgresql.org/pub/repos/yum/reporpms/EL-7-x86_64/pgdg-redhat-repo-latest.noarch.rpm --no-check-certificate

sudo yum install pgdg-redhat-repo-latest.noarch.rpm -y

1. pg9.6.24安装pglogical扩展(yum安装)

curl https://techsupport.enterprisedb.com/api/repository/dl/default/release/9.6/rpm | bash

yum install postgresql96-server postgresql96-contrib postgresql96 postgresql96-pglogical

2. pg14安装扩展(yum安装)

curl https://techsupport.enterprisedb.com/api/repository/dl/default/release/14/rpm | bash

sudo yum install postgresql14-pglogical postgresql14-server postgresql14 postgresql14-contrib

安装完成之后注意修改 /usr/lib/systemd/system/postgresql-14.service 的PGDATA变量

源节点IP:10.4.9.166

目标节点IP:10.4.9.250

#配置pg_hba.conf文件,测试服务器均为10网段,为了方便,我加入以下条目

host all all 10.0.0.0/8 trust

#以下操作在源节点操作,创建role,database,table并写入数据

postgres=# create role logic login ;

CREATE ROLE

postgres=# create database logic with owner postgres;

CREATE DATABASE

postgres=# grant all on DATABASE logic to logic with grant option;

GRANT

postgres=# \c logic logic

You are now connected to database "logic" as user "logic".

logic=> create schema logic;

CREATE SCHEMA

logic=> grant USAGE on SCHEMA logic to logic ;

GRANT

logic=> create table tbl_logic(a int,b text);

CREATE TABLE

logic=> insert into tbl_logic values (1,'replica set');

INSERT 0 1

logic=> select * from tbl_logic ;

a | b

---+-------------

1 | replica set

(1 row)

logic=# alter table logic.tbl_logic add primary key(a);

ALTER TABLE

#使用超级用户安装插件

logic=> \c logic postgres

You are now connected to database "logic" as user "postgres".

logic=# create extension pglogical;

CREATE EXTENSION

logic=# \dx

List of installed extensions

Name | Version | Schema | Description

-----------+---------+------------+--------------------------------

pglogical | 2.3.3 | pglogical | PostgreSQL Logical Replication

plpgsql | 1.0 | pg_catalog | PL/pgSQL procedural language

(2 rows)

#创建provider node

SELECT pglogical.create_node(

node_name := 'logic_pub',

dsn := 'host=10.4.9.166 port=1921 user=postgres dbname=logic'

);

#可以复制某个schema下所有表

SELECT pglogical.replication_set_add_all_tables('default', ARRAY['hank']);

#也可以指定表

select pglogical.replication_set_add_table('default',relation:='logic.tbl_logic'::regclass,synchronize_data:=true);

replication_set_add_table

---------------------------

t

(1 row)

#以下操作均在目标节点操作

postgres=# create role logic login ;

CREATE ROLE

postgres=# create database logic with owner postgres;

CREATE DATABASE

postgres=# grant all on DATABASE logic to logic with grant option;

GRANT

postgres=# \c logic logic

You are now connected to database "logic" as user "logic".

logic=> create schema logic;

CREATE SCHEMA

logic=> grant USAGE on SCHEMA logic to logic ;

GRANT

logic=> \c logic postgres

logic=# create extension pglogical;

CREATE EXTENSION

#创建subscriber node,注意IP为目标复制节点IP

SELECT pglogical.create_node(

node_name := 'logic_sub',

dsn := 'host=10.4.9.250 port=1921 user=postgres dbname=logic'

);

#创建subscription,ip以及其他信息写源地址相关信息

SELECT pglogical.create_subscription(

subscription_name := 'logic_subscription',

provider_dsn := 'host=10.4.9.166 port=1921 user=postgres dbname=logic'

);

#可见

logic=# select * from logic.tbl_logic ;

a | b

---+-------------

1 | replica set

(1 row)\q

pg vacuum操作

1. vacuum的命令

2. 查询后台运行的进程并停止

SELECT pid, query FROM pg_stat_activity WHERE query ILIKE '%VACUUM%';

postgres=# SELECT pg_cancel_backend(13733);

pg_cancel_backend

-------------------

t

(1 row)

3.查看vacuum的进度:

postgres=# select * from pg_stat_progress_vacuum ;

pid | datid | datname | relid | phase | heap_blks_total | heap_blks_scanned | heap_blks_vacuumed | index_vacuum_count | max_dead_tuples | num_dead_tuples

-------+-------+----------+-------+----------------+-----------------+-------------------+--------------------+--------------------+-----------------+-----------------

13733 | 12407 | postgres | 20248 | scanning heap | 37085959 | 6327585 | 3646346 | 1 | 44739242 | 36070148

16616 | 12407 | postgres | 20251 | vacuuming heap | 380255808 | 40738228 | 35003289 | 2 | 44739242 | 44738954

(2 rows)

pg 日期计算

select NOW() - interval '2 day';

select NOW() - interval '2 hour';

日期加法

select now()::timestamp(0)+'1year' as nextyear ;

select now()::timestamp(0)+'1month' as nextmonth ;

select now()::timestamp(0)+'1day' as nextday ;

select now()::timestamp(0)+'1hour' as nexthour ;

select now()::timestamp(0)+'1minute' as nextminute ;

select now()::timestamp(0)+'1second' as nextsecond ;

日期减法

select now()::timestamp(0)+'-1year' as lastyear ;

select now()::timestamp(0)+'-1month' as lastmonth ;

select now()::timestamp(0)+'-1day' as lastday ;

select now()::timestamp(0)+'-1hour' as lasthour ;

select now()::timestamp(0)+'-1minute' as lastminute ;

select now()::timestamp(0)+'-1second' as lastsecond ;

注:日期减法时只能改变字符串中的数字为负数才生效

如果如下写法则是错误的select now()::timestamp(0)-'1second' as lastsecond ;

混合运算

select now()::timestamp(0)+'1year 1 month 1day 1hour 1minute' as next ;

select now()::timestamp(0)+'-1year 1 month 1day 1hour 1minute' as last;

pg创建表create table … like …

如果希望复制注释,那么需要指定INCLUDING COMMENTS,很明显,这也是我们需要的。至此我们已经可以筛选出我们需要的东西了,下面通过标记看一下:

including constraints :没有CHECK约束,所以不考虑

including indexes :需要主键约束

including comments:需要注释

including defaults:不需要复制序列,所以不要

结论是我们的LIKE选项为:INCLUDING INDEXES INCLUDING COMMENTS,所以这次就能复制一个“最贴近我们需求”的表了:

create table t_key_event_file_student_103 (like t_key_event_file_student INCLUDING INDEXES INCLUDING COMMENTS);

修改表名:

alter table nodestore_node rename to nodestore_node_bak02;

pg truncate table很慢

TRUNCATE必须刷新shared_buffers被截断的表,并且必须取消链接旧文件,这在文件系统上可能很慢,如删除速度慢ext3.

1.5小时是非常极端的,因为我们通常最多只谈几秒钟.很可能你有其他会话持有表上的锁,阻止TRUNCATE进行.见pg_catalog.pg_locks和pg_catalog.pg_stat_activity.

关于锁监控的PostgreSQL wiki文章应该是有用的.

另请参见:Postgresql截断速度

查询是否被某些进程阻止

SELECT

activity.pid,

activity.usename,

activity.query,

blocking.pid AS blocking_id,

blocking.query AS blocking_query

FROM pg_stat_activity AS activity

JOIN pg_stat_activity AS blocking ON blocking.pid = ANY(pg_blocking_pids(activity.pid));

如有必要终止它 SELECT pg_terminate_backend(PID);

评论区